Archive for the 'processing' Category

This is a video of two people playing a video game I made using Processing last May. Each player sees a silhouette of themselves on screen whenever they move (the silhouette disappears when they stand still), along with the other player’s silhouette. The object of the game is to collect the blue balls and avoid the red ones to achieve a high score. A ball is collected only when both players’ silhouettes overlap each other and the ball, so the two players must work together. Each blue ball collected adds one point, and each red ball collected subtracts one.
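Here is a minimal sketch of the scoring rule, written in plain Java (the language Processing is built on) rather than as a Processing sketch. It assumes each player’s silhouette arrives as a boolean mask the size of the screen (true where motion was detected) and that balls are circles. `CoopCollect`, `collects`, `score` and `rect` are hypothetical names, not the original game’s code. A ball counts only if at least one pixel inside it is covered by both masks.

```java
import java.util.List;

// Hypothetical sketch of the cooperative collection rule: a ball counts only
// when some pixel inside it is covered by BOTH players' silhouette masks.
class CoopCollect {

    // A ball centered at (x, y) with radius r; blue balls score +1, red balls -1.
    record Ball(int x, int y, int r, boolean blue) {}

    // True if the two silhouette masks overlap at a pixel inside the ball.
    static boolean collects(boolean[][] p1, boolean[][] p2, Ball b) {
        int h = p1.length, w = p1[0].length;
        for (int y = Math.max(0, b.y() - b.r()); y <= Math.min(h - 1, b.y() + b.r()); y++) {
            for (int x = Math.max(0, b.x() - b.r()); x <= Math.min(w - 1, b.x() + b.r()); x++) {
                int dx = x - b.x(), dy = y - b.y();
                boolean insideBall = dx * dx + dy * dy <= b.r() * b.r();
                if (insideBall && p1[y][x] && p2[y][x]) {
                    return true;
                }
            }
        }
        return false;
    }

    // Score change for one frame: +1 per blue ball collected, -1 per red ball.
    static int score(boolean[][] p1, boolean[][] p2, List<Ball> balls) {
        int delta = 0;
        for (Ball b : balls) {
            if (collects(p1, p2, b)) {
                delta += b.blue() ? 1 : -1;
            }
        }
        return delta;
    }

    // Fills a rectangle in a w-by-h mask, standing in for a detected silhouette.
    static boolean[][] rect(int w, int h, int x0, int y0, int x1, int y1) {
        boolean[][] m = new boolean[h][w];
        for (int y = y0; y < y1; y++) {
            for (int x = x0; x < x1; x++) {
                m[y][x] = true;
            }
        }
        return m;
    }

    public static void main(String[] args) {
        boolean[][] p1 = rect(100, 100, 0, 0, 60, 100);  // player 1 covers the left side
        boolean[][] p2 = rect(100, 100, 40, 0, 100, 100); // player 2 covers the right side
        List<Ball> balls = List.of(
                new Ball(50, 50, 5, true),   // inside the overlap: collected
                new Ball(10, 50, 5, true),   // player 1 only: not collected
                new Ball(55, 20, 5, false)); // inside the overlap: red, costs a point
        System.out.println(score(p1, p2, balls)); // prints 0
    }
}
```

Requiring the two masks to intersect inside the ball’s disc, rather than anywhere on screen, is one reading of the rule; a stricter version could demand a minimum number of shared pixels before a ball counts.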

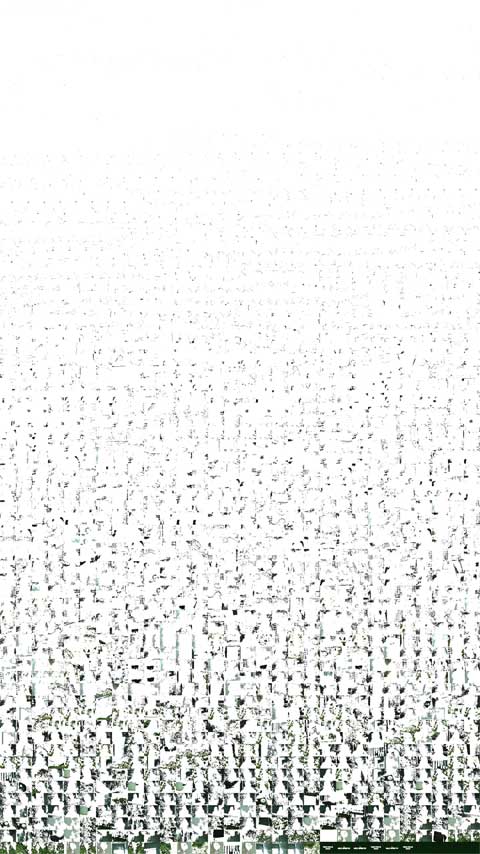

Grey/Green

The first 35 minutes of Grey Gardens arranged by green.

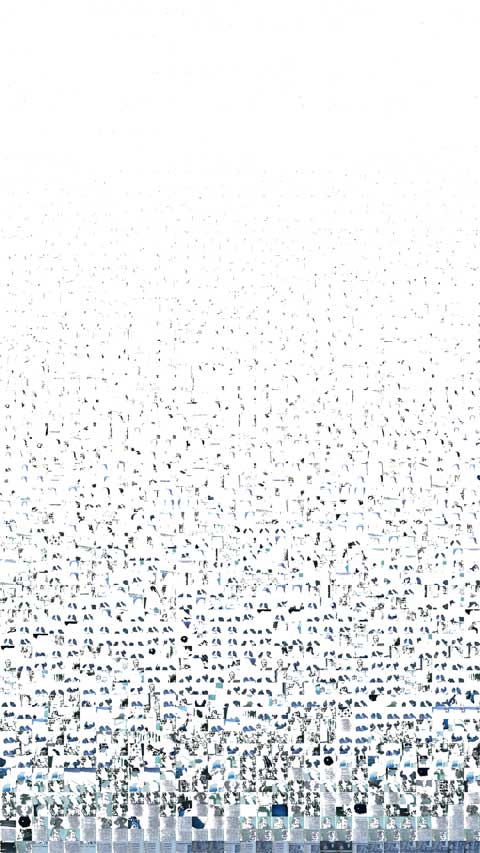

Grey/Blue

The first 35 minutes of Grey Gardens arranged by blue.
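The post does not describe how the frames were arranged, so the following is only one way such an ordering could be produced. It assumes each frame is reduced to the average value of a single color channel and the frames are then sorted by that value. `ChannelSort`, `meanChannel` and `sortBy` are hypothetical names. The same code handles green (bit shift 8) and blue (bit shift 0) in a packed RGB pixel.

```java
import java.awt.image.BufferedImage;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: order video frames by the average of one color channel.
class ChannelSort {

    // Bit offset of each channel inside a packed 0xRRGGBB pixel.
    enum Channel {
        RED(16), GREEN(8), BLUE(0);
        final int shift;
        Channel(int shift) { this.shift = shift; }
    }

    // Mean value (0-255) of the chosen channel over every pixel in the frame.
    static double meanChannel(BufferedImage frame, Channel c) {
        long sum = 0;
        for (int y = 0; y < frame.getHeight(); y++) {
            for (int x = 0; x < frame.getWidth(); x++) {
                sum += (frame.getRGB(x, y) >> c.shift) & 0xFF;
            }
        }
        return (double) sum / ((long) frame.getWidth() * frame.getHeight());
    }

    // Returns a new list of frames ordered from least to most of the channel.
    static List<BufferedImage> sortBy(List<BufferedImage> frames, Channel c) {
        Map<BufferedImage, Double> key = new IdentityHashMap<>();
        for (BufferedImage f : frames) {
            key.put(f, meanChannel(f, c)); // compute each average only once
        }
        List<BufferedImage> sorted = new ArrayList<>(frames);
        sorted.sort(Comparator.comparingDouble(f -> key.get(f)));
        return sorted;
    }

    // Builds a small solid-color frame for testing.
    static BufferedImage solid(int rgb) {
        BufferedImage img = new BufferedImage(4, 4, BufferedImage.TYPE_INT_RGB);
        for (int y = 0; y < 4; y++) {
            for (int x = 0; x < 4; x++) {
                img.setRGB(x, y, rgb);
            }
        }
        return img;
    }

    public static void main(String[] args) {
        List<BufferedImage> frames = List.of(solid(0x00C000), solid(0x004000), solid(0x008000));
        for (BufferedImage f : sortBy(frames, Channel.GREEN)) {
            System.out.println(meanChannel(f, Channel.GREEN));
        }
    }
}
```

The averages are cached before sorting because 35 minutes of video is tens of thousands of frames, and recomputing each mean inside the comparator would repeat that work on every comparison.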

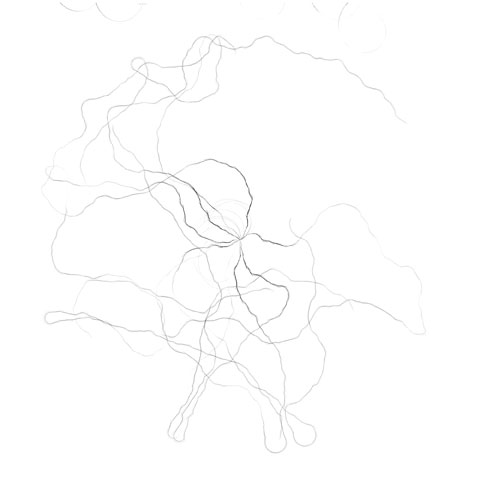

Nik and I built this mobile sensor, which sends light levels and accelerometer data to an online database via text message. The visualization above was made from five minutes of data recorded while running around with the sensor. Light level is mapped to the opacity of the blue, and faster changes in tilt (for instance, when running with the sensor in hand) produce longer lines.
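The mapping can be sketched as two small functions. This assumes a 10-bit light reading (0 to 1023), tilt measured as the angle between the accelerometer vector and the sensor’s z axis, and an arbitrary scale factor for line length. None of these values, nor the names `SensorStroke`, `tilt`, `alpha` and `length`, come from the original project.

```java
// Hypothetical mapping from sensor readings to one blue stroke.
class SensorStroke {

    static final double MAX_LIGHT = 1023.0;          // assumed 10-bit light reading
    static final double PIXELS_PER_RAD_PER_S = 50.0; // assumed length scale

    // Tilt in radians: angle between the accelerometer vector and the z axis.
    static double tilt(double ax, double ay, double az) {
        double mag = Math.sqrt(ax * ax + ay * ay + az * az);
        if (mag == 0) {
            return 0; // no usable reading; treat as flat
        }
        // Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
        return Math.acos(Math.max(-1, Math.min(1, az / mag)));
    }

    // Opacity (0-255) of the blue stroke, proportional to the light reading.
    static int alpha(double light) {
        double t = Math.max(0, Math.min(1, light / MAX_LIGHT));
        return (int) Math.round(t * 255);
    }

    // Stroke length grows with the rate of tilt change between two samples.
    static double length(double tiltBefore, double tiltNow, double dtSeconds) {
        return Math.abs(tiltNow - tiltBefore) / dtSeconds * PIXELS_PER_RAD_PER_S;
    }

    public static void main(String[] args) {
        double flat = tilt(0, 0, 1);
        double tipped = tilt(1, 0, 1); // tipped 45 degrees
        System.out.println("alpha at full light: " + alpha(MAX_LIGHT));
        System.out.println("length for 45 degrees in 0.5 s: " + length(flat, tipped, 0.5));
    }
}
```

Dividing by the time between samples makes line length track the rate of change rather than the raw change, so the same tilt produces a longer line when it happens faster.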

We are currently working on a proper write-up that will include code and schematics.

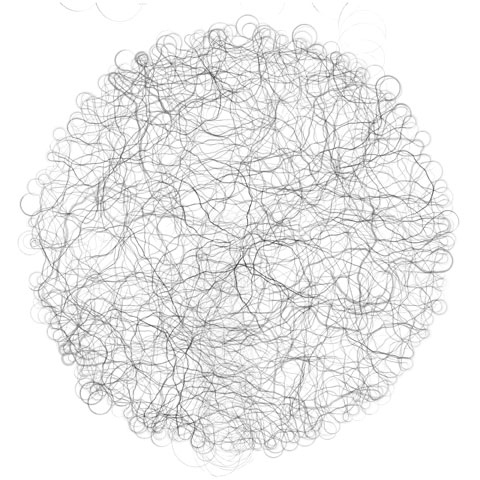

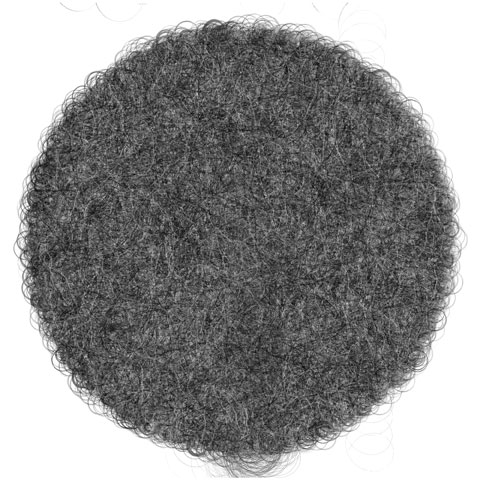

Nik Hanselmann and I installed a sensor in the Sesnon Gallery that collects environmental data (light level, sound level, proximity and temperature) from the space and uploads it to an online database every two seconds. We developed software that turns this data into a generative drawing that evolves as the readings change over time. The screenshots above show the drawing at different points in time.
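One way a drawing can evolve with incoming data is for each reading to add one stroke to a canvas that is never cleared, so the image grows denser the longer it runs. At one reading every two seconds, that is 1,800 strokes an hour and 5,400 over three hours. The specific mappings (light to direction, sound to segment length, temperature to grey level, proximity to stroke width), the normalization of readings to 0..1, and all names here are hypothetical. This is not the installation’s actual software.

```java
import java.awt.BasicStroke;
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.util.Random;

// Hypothetical sketch: every reading adds one stroke to a canvas that is never
// cleared, so the image keeps getting denser the longer it runs.
class AccumulatingDrawing {

    // One reading from the gallery sensor; each value assumed normalized to 0..1.
    record Reading(double light, double sound, double proximity, double temperature) {}

    static final int SAMPLE_SECONDS = 2; // upload interval described in the text

    final BufferedImage canvas;
    private final Graphics2D g;
    private double x, y; // current pen position

    AccumulatingDrawing(int width, int height) {
        canvas = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        g = canvas.createGraphics();
        g.setColor(Color.WHITE);
        g.fillRect(0, 0, width, height);
        x = width / 2.0;
        y = height / 2.0;
    }

    // Assumed mappings: light sets direction, sound sets segment length,
    // temperature sets grey level, proximity sets stroke width.
    void add(Reading r) {
        double angle = clamp(r.light(), 1) * 2 * Math.PI;
        double length = 5 + clamp(r.sound(), 1) * 20;
        double nx = clamp(x + Math.cos(angle) * length, canvas.getWidth() - 1);
        double ny = clamp(y + Math.sin(angle) * length, canvas.getHeight() - 1);
        int grey = (int) Math.round((1 - clamp(r.temperature(), 1)) * 200);
        g.setColor(new Color(grey, grey, grey));
        g.setStroke(new BasicStroke((float) (1 + clamp(r.proximity(), 1) * 4)));
        g.drawLine((int) x, (int) y, (int) nx, (int) ny);
        x = nx;
        y = ny;
    }

    // Limits v to the range [0, max].
    private static double clamp(double v, double max) {
        return Math.max(0, Math.min(max, v));
    }

    // Number of readings collected in a run of the given length.
    static long readingsIn(double hours) {
        return Math.round(hours * 3600 / SAMPLE_SECONDS);
    }

    // Counts pixels that are no longer pure white.
    long inkedPixels() {
        long n = 0;
        for (int py = 0; py < canvas.getHeight(); py++) {
            for (int px = 0; px < canvas.getWidth(); px++) {
                if ((canvas.getRGB(px, py) & 0xFFFFFF) != 0xFFFFFF) {
                    n++;
                }
            }
        }
        return n;
    }

    public static void main(String[] args) {
        AccumulatingDrawing d = new AccumulatingDrawing(200, 200);
        Random rnd = new Random(1); // stand-in for live sensor data
        for (int i = 0; i < 100; i++) {
            d.add(new Reading(rnd.nextDouble(), rnd.nextDouble(), rnd.nextDouble(), rnd.nextDouble()));
        }
        System.out.println(readingsIn(3) + " readings in three hours; inked pixels so far: " + d.inkedPixels());
    }
}
```

Because strokes are only ever added, the canvas records the whole history of the room, which fits the post’s suggestion to let the online version run for hours.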

We exhibited the live generative drawing in the Porter Faculty Gallery and the DANM Lounge. An online version is available at http://transmogrify.me; let it run for a few hours to build up a rich, complex image.